Quarkus Cache

This is a deep dive into the world of caching with Quarkus. We'll celebrate its incredible simplicity, but more importantly, we'll expose the critical trap that snares so many developers: the difference between a local cache and a distributed one. Let's learn how to wield this power correctly.

The Magic Wand: Caching with a Single Annotation

Let's start with a classic scenario: a weather service that makes a slow, 2-second network call to get a forecast. If three users request the same forecast, that's 6 seconds of wasted time and resources.

    import jakarta.enterprise.context.ApplicationScoped;
    import java.time.LocalDate;

    @ApplicationScoped
    public class WeatherForecastService {

        // This method is painfully slow
        public String getDailyForecast(LocalDate date, String city) {
            try {
                Thread.sleep(2000L); // Simulate slow external service call
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
            return "Forecast for " + city + " on " + date;
        }
    }

Now, let's apply the magic. We add the `quarkus-cache` extension and sprinkle one annotation on our method.

    import io.quarkus.cache.CacheResult;

    @ApplicationScoped
    public class WeatherForecastService {

        @CacheResult(cacheName = "weather-cache")
        public String getDailyForecast(LocalDate date, String city) {
            // ... same slow logic as before
        }
    }

What just happened? With `@CacheResult`, Quarkus now does the following:

- The first time `getDailyForecast` is called with a specific date and city (e.g., `2024-10-27`, `London`), it executes the slow method.

- It then stores the return value in a cache named `weather-cache`, using the method parameters as a key.

- The second time (and every time after) the method is called with the exact same parameters, Quarkus finds the result in the cache and returns it instantly, without ever executing the slow logic again.

The first request takes 2 seconds. Every subsequent identical request takes milliseconds. It's brilliant.
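To make that behaviour concrete, here is a minimal plain-Java sketch of the same idea: a map keyed on the method arguments, with the slow path running only on a miss. This is an illustration and not how Quarkus implements `@CacheResult` internally; the class name `MemoizedForecast`, the `slowCallCount` helper, and the use of a `List` as the composite key are assumptions made for the example, and the 2-second sleep is left out so it runs instantly.

```java
import java.time.LocalDate;
import java.util.List;
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.atomic.AtomicInteger;

// Hypothetical stand-in for a cached service; not the Quarkus implementation.
class MemoizedForecast {

    // The key combines both arguments; List gives us equals/hashCode for free.
    private final Map<List<Object>, String> cache = new ConcurrentHashMap<>();

    // Counts how often the slow path actually runs.
    private final AtomicInteger slowCalls = new AtomicInteger();

    String getDailyForecast(LocalDate date, String city) {
        // computeIfAbsent invokes the loader only when the key is not cached yet.
        return cache.computeIfAbsent(List.<Object>of(date, city), key -> slowLookup(date, city));
    }

    int slowCallCount() {
        return slowCalls.get();
    }

    // Stands in for the slow external call.
    private String slowLookup(LocalDate date, String city) {
        slowCalls.incrementAndGet();
        return "Forecast for " + city + " on " + date;
    }

    public static void main(String[] args) {
        MemoizedForecast service = new MemoizedForecast();
        LocalDate day = LocalDate.of(2024, 10, 27);
        service.getDailyForecast(day, "London"); // miss: runs the slow path
        service.getDailyForecast(day, "London"); // hit: served from the map
        service.getDailyForecast(day, "Paris");  // different key: miss again
        System.out.println(service.slowCallCount()); // prints 2
    }
}
```

Note that the key is built from every argument, so a different city or date is a separate cache entry.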

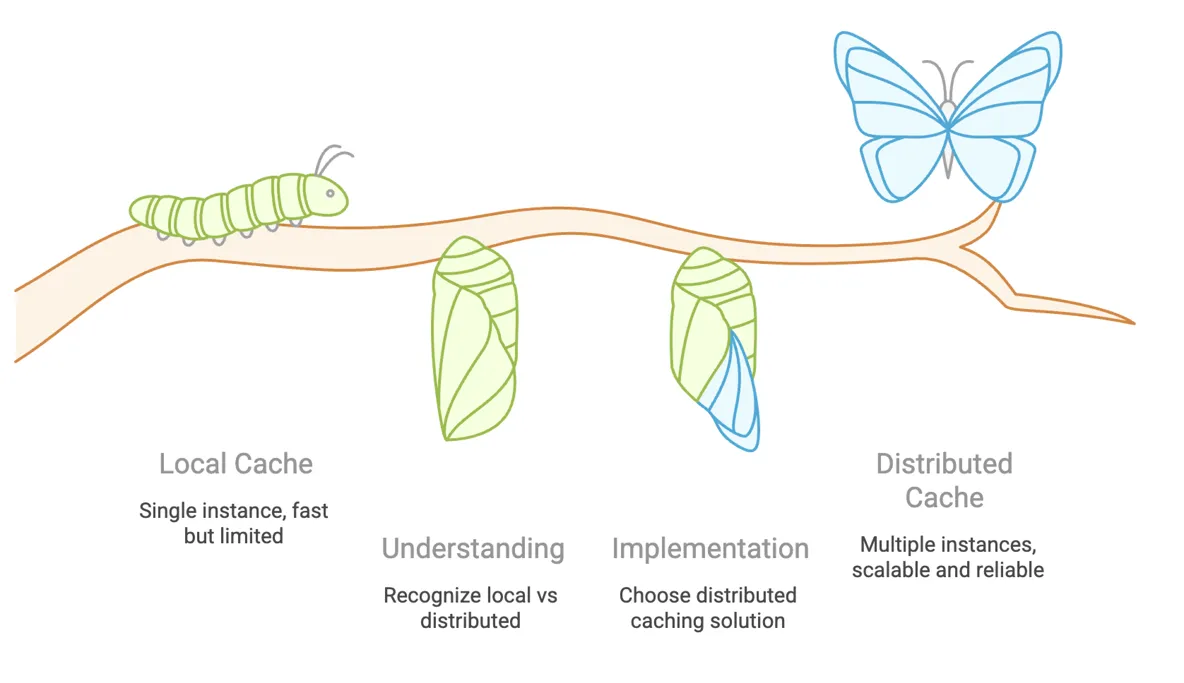

The Ticking Time Bomb: The Single-Instance Trap

Everything works perfectly on your local machine. You deploy your application. To handle the load, you scale it to two instances (or pods, in Kubernetes). And now, you've unknowingly armed a time bomb.

The default cache provider in Quarkus is Caffeine. It's an incredibly fast, in-memory cache. The key words there are in-memory. This means each instance of your application has its own, completely separate cache. They do not talk to each other. They do not share anything.

Now, let's consider cache invalidation. Suppose we have an endpoint to update a user's profile. We want to clear the old profile from the cache so the next request fetches the new data. We use the @CacheInvalidate annotation.

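    import io.quarkus.cache.CacheInvalidate;
    import io.quarkus.cache.CacheKey;
    import io.quarkus.cache.CacheResult;

    @ApplicationScoped
    public class UserService {

        @CacheResult(cacheName = "user-profiles")
        public UserProfile getProfile(String userId) {
            // ... slow database call ...
        }

        // @CacheKey marks userId as the only key component, so it matches getProfile's key
        @CacheInvalidate(cacheName = "user-profiles")
        public void updateProfile(@CacheKey String userId, UserProfile newData) {
            // ... update database ...
        }
    }

Here is the catastrophic failure scenario in a scaled environment: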

- A user's browser requests their profile. The load balancer sends the request to Instance A. It fetches the profile from the database and caches it in its local memory.

- The user updates their profile. The load balancer sends this `updateProfile` request to Instance B.

- Instance B updates the database and dutifully invalidates the entry for that user... in its own local cache. Instance A knows nothing about this.

- The user refreshes their profile page. The load balancer, seeking to distribute load, sends the request back to Instance A.

- Instance A finds the user's profile in its local cache and instantly returns the stale, outdated data.

Let this be burned into your memory: if your application runs on more than one instance, the default in-memory cache is a bug waiting to happen. An invalidation only clears the cache of the instance that handled it, so every other instance keeps serving stale data until its own entry expires or is evicted.
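The failure is easy to reproduce without any framework. The sketch below simulates two instances in plain Java, each with its own private map as a cache and a shared map standing in for the database. All names here (`StaleCacheDemo`, `Instance`, the `"u1"` user) are hypothetical and chosen only for the illustration.

```java
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;

// Hypothetical simulation of two app instances with separate in-memory caches.
class StaleCacheDemo {

    // Stands in for the database that both instances share.
    static final Map<String, String> database = new ConcurrentHashMap<>();

    // One application instance with its own private cache.
    static class Instance {
        private final Map<String, String> localCache = new ConcurrentHashMap<>();

        String getProfile(String userId) {
            // Reads the database only when this instance has no cached entry.
            return localCache.computeIfAbsent(userId, database::get);
        }

        void updateProfile(String userId, String newData) {
            database.put(userId, newData);
            localCache.remove(userId); // clears this instance's cache only
        }
    }

    public static void main(String[] args) {
        database.put("u1", "old name");
        Instance a = new Instance();
        Instance b = new Instance();

        System.out.println(a.getProfile("u1")); // old name (now cached on A)
        b.updateProfile("u1", "new name");      // B updates and invalidates locally
        System.out.println(a.getProfile("u1")); // old name (stale)
        System.out.println(b.getProfile("u1")); // new name
    }
}
```

Instance A never sees the invalidation, which is exactly the stale read described in the scenario.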

The Real Solution: Distributed Caching

The most direct way to solve this problem is to use a cache that lives outside your application instances: a centralized, distributed cache. All your application instances connect to this single, shared cache service. When Instance B invalidates an entry, it's removed from the central cache, and Instance A will see that it's gone on the next request.
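As a rough illustration, the plain-Java sketch below models two instances that read and invalidate through one shared map, which stands in for an external cache service such as Redis. It is a simulation under simplified assumptions (no network, no serialization), and the names `SharedCacheDemo`, `Instance`, and `"u1"` are hypothetical.

```java
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;

// Hypothetical simulation of two app instances backed by one shared cache.
class SharedCacheDemo {

    // Stands in for the database.
    static final Map<String, String> database = new ConcurrentHashMap<>();

    // Stands in for the external cache service that every instance talks to.
    static final Map<String, String> sharedCache = new ConcurrentHashMap<>();

    // An application instance that keeps no cache of its own.
    static class Instance {

        String getProfile(String userId) {
            // Falls back to the database only when the shared cache has no entry.
            return sharedCache.computeIfAbsent(userId, database::get);
        }

        void updateProfile(String userId, String newData) {
            database.put(userId, newData);
            sharedCache.remove(userId); // visible to every instance
        }
    }

    public static void main(String[] args) {
        database.put("u1", "old name");
        Instance a = new Instance();
        Instance b = new Instance();

        System.out.println(a.getProfile("u1")); // old name (cached centrally)
        b.updateProfile("u1", "new name");      // B invalidates the shared entry
        System.out.println(a.getProfile("u1")); // new name (A reloads from the database)
    }
}
```

Because the invalidation happens in the one place every instance reads from, it no longer matters which instance handles the update.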

Quarkus has first-class support for popular distributed caches like Redis and Infinispan. The best part? Your application code (@CacheResult, @CacheInvalidate) doesn't have to change at all! You just add a new extension and configure it.

To switch to a Redis-backed cache, you would:

- Add the Redis cache extension: `quarkus extension add redis-cache`

- Configure the connection to your Redis server in `application.properties`.

For a Redis server running locally, that configuration looks like this:

    # Connection to the Redis server used by the cache backend
    quarkus.redis.hosts=redis://localhost:6379

    # (Optional) Set a default TTL for entries in this cache
    quarkus.cache.redis.user-profiles.expire-after-write=10m

That's it. Now, when your `UserService` methods are called, Quarkus will automatically use Redis instead of the local Caffeine cache. Your invalidation problem is solved.
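The optional TTL is worth a closer look, because it caps how long any entry can live even if an invalidation is missed. The sketch below shows expire-after-write semantics in plain Java, with the current time passed in explicitly so the behaviour is easy to follow. It is a simplified model and not the Redis or Quarkus implementation; `ExpiringCache` and its `get` signature are hypothetical.

```java
import java.time.Duration;
import java.time.Instant;
import java.util.HashMap;
import java.util.Map;
import java.util.function.Function;

// Hypothetical cache whose entries expire a fixed time after they were written.
class ExpiringCache<K, V> {

    // A cached value together with the moment it was stored.
    private static final class Entry<T> {
        final T value;
        final Instant writtenAt;

        Entry(T value, Instant writtenAt) {
            this.value = value;
            this.writtenAt = writtenAt;
        }
    }

    private final Map<K, Entry<V>> entries = new HashMap<>();
    private final Duration ttl;

    ExpiringCache(Duration ttl) {
        this.ttl = ttl;
    }

    // Returns the cached value, reloading it when missing or older than the TTL.
    synchronized V get(K key, Instant now, Function<K, V> loader) {
        Entry<V> entry = entries.get(key);
        boolean expired = entry != null && !now.isBefore(entry.writtenAt.plus(ttl));
        if (entry == null || expired) {
            entry = new Entry<>(loader.apply(key), now);
            entries.put(key, entry);
        }
        return entry.value;
    }

    public static void main(String[] args) {
        ExpiringCache<String, String> cache = new ExpiringCache<>(Duration.ofMinutes(10));
        Instant start = Instant.parse("2024-10-27T12:00:00Z");

        System.out.println(cache.get("u1", start, id -> "old name"));                  // old name
        System.out.println(cache.get("u1", start.plusSeconds(300), id -> "new name")); // old name
        System.out.println(cache.get("u1", start.plusSeconds(600), id -> "new name")); // new name
    }
}
```

A TTL does not replace invalidation, but it turns "stale forever" into "stale for at most ten minutes", which is a useful safety net with any backend.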

Quarkus caching is an incredibly powerful tool for boosting performance. The annotation-based API is a joy to use. But this power demands responsibility. Always ask yourself: "Will this application ever run on more than one instance?"

- If the answer is no (e.g., a simple utility, a single-container service), the default in-memory cache is fantastic.

- If the answer is yes or even maybe, you must plan for a distributed cache from the start. It will save you from a world of pain, debugging, and angry users wondering why their data isn't updating.

For more details, explore the Quarkus Caching Guide and the specific guides for the Redis and Infinispan backends.