DeepSeek Releases V3.2 Model Lineup With Gold-Medal Performance on International Math and Programming Olympiad Problems

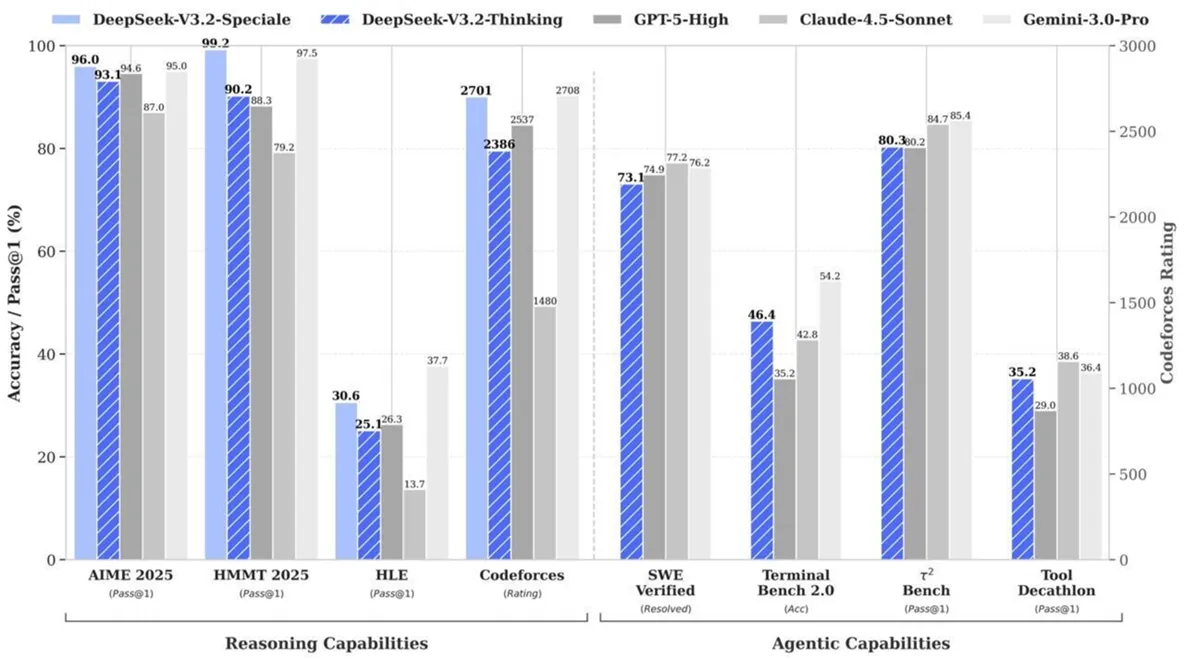

AI startup DeepSeek has released two new models, V3.2 and V3.2 Speciale, and claims they perform on par with leading proprietary models such as GPT-5 and Gemini 3 Pro. Both models are optimized for complex, agent-based tasks.

DeepSeek-V3.2: The Accessible Powerhouse

DeepSeek-V3.2 Speciale: The Expert Performer

- Outperforms Google's Gemini 3 Pro (preview) in mathematical reasoning.

- Achieved gold-medal-level results on problem sets from the International Mathematical Olympiad (IMO), the ICPC World Finals, and the International Olympiad in Informatics (IOI).

- Hosted access is offered to developers exclusively through the official API.

Open Access to Model Weights

To promote transparency and community development, DeepSeek has published the weights of both 685-billion-parameter models, DeepSeek-V3.2 and DeepSeek-V3.2 Speciale, on Hugging Face.